Despite my day-to-day being SEO, ever since Google introduced page speed as a ranking factor, I've become a bit obsessive about finding ways to squeeze every bit of performance possible out of a site.

For an unrelated analysis I've been doing (that I may also release), I collated the top 2,200 sites in the UK by organic traffic within 22 different industries.

It struck me that I could use data directly from CrUX, alongside additional insights from the PageSpeed Insights API, for the origin of every site, and see how speed correlates with each site's organic performance.

At the time of writing, the only downside is that not all tools have been updated to use Google's new Core Web Vitals metrics.

So it's currently missing some newer metrics such as CLS (cumulative layout shift) and LCP (largest contentful paint) that Google will soon use within their ranking algorithms.

But I have included first paint and DOMContentLoaded, as well as the user-centric performance metric FCP (first contentful paint), all found within the older CrUX tables.
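As I understand the CrUX BigQuery schema, these metrics are stored as histograms: each origin has a list of bins, each with a `start`, an `end` and a `density` (the share of page loads that fell in that bin). A percentile such as the 90th can be approximated by summing density bin by bin until it reaches 0.9 and reporting the start of that bin. Below is a minimal sketch of that approach; the `Bin` type and `percentile_from_histogram` helper are hypothetical, and this is not necessarily the exact calculation behind the figures in this post.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Bin:
    start: int      # bin start in milliseconds
    end: int        # bin end in milliseconds
    density: float  # share of page loads that fell in this bin


def percentile_from_histogram(bins: list[Bin], p: float) -> int:
    """Approximate the p-th percentile (0 < p <= 1) of a CrUX-style histogram.

    Returns the start of the first bin at which the cumulative density
    reaches p. Densities are normalised by their total, so the bins need not
    sum to exactly 1 (e.g. after filtering to one device type).
    """
    if not 0 < p <= 1:
        raise ValueError("p must be in (0, 1]")
    total = sum(b.density for b in bins)
    if total <= 0:
        raise ValueError("histogram has no density")
    ordered = sorted(bins, key=lambda b: b.start)
    cumulative = 0.0
    for b in ordered:
        cumulative += b.density / total
        if cumulative >= p:
            return b.start
    # Floating-point rounding can leave the running total fractionally below p.
    return ordered[-1].start


bins = [Bin(0, 1000, 0.5), Bin(1000, 2000, 0.3), Bin(2000, 3000, 0.15), Bin(3000, 4000, 0.05)]
print(percentile_from_histogram(bins, 0.9))  # 2000
```

With these example bins, the cumulative density first passes 0.9 in the third bin, so the sketch reports 2,000 ms. Bin width limits precision, so results are only as fine-grained as the histogram.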

I'll update the data in this blog post with Core Web Vitals once the APIs have caught up with the changes.

So, for the rest of this post, I'll be running through some key insights from the data alongside some data viz.

Site speed and organic visibility do not correlate

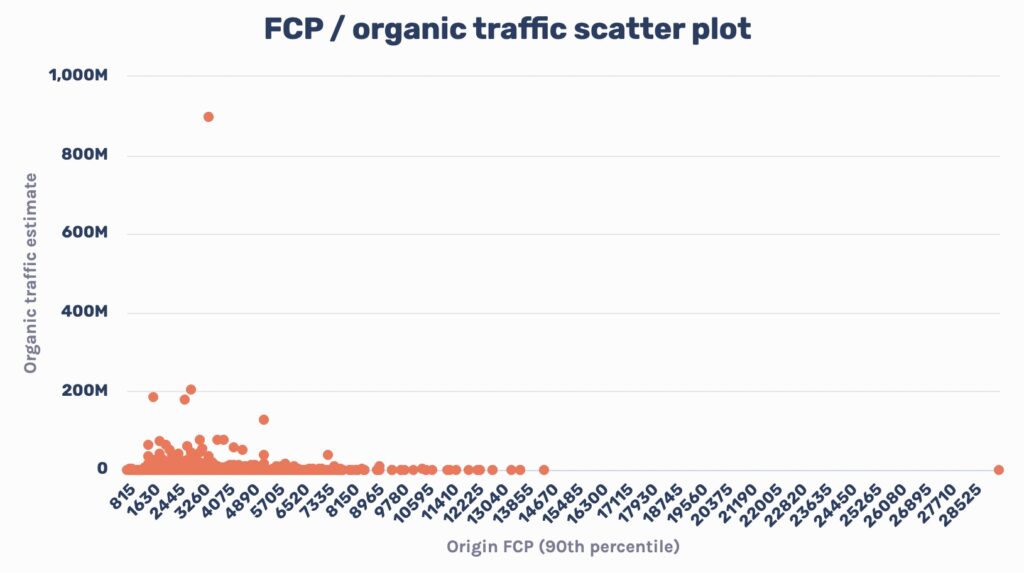

One key thing I found was that there is no correlation between site speed and organic ranking performance.

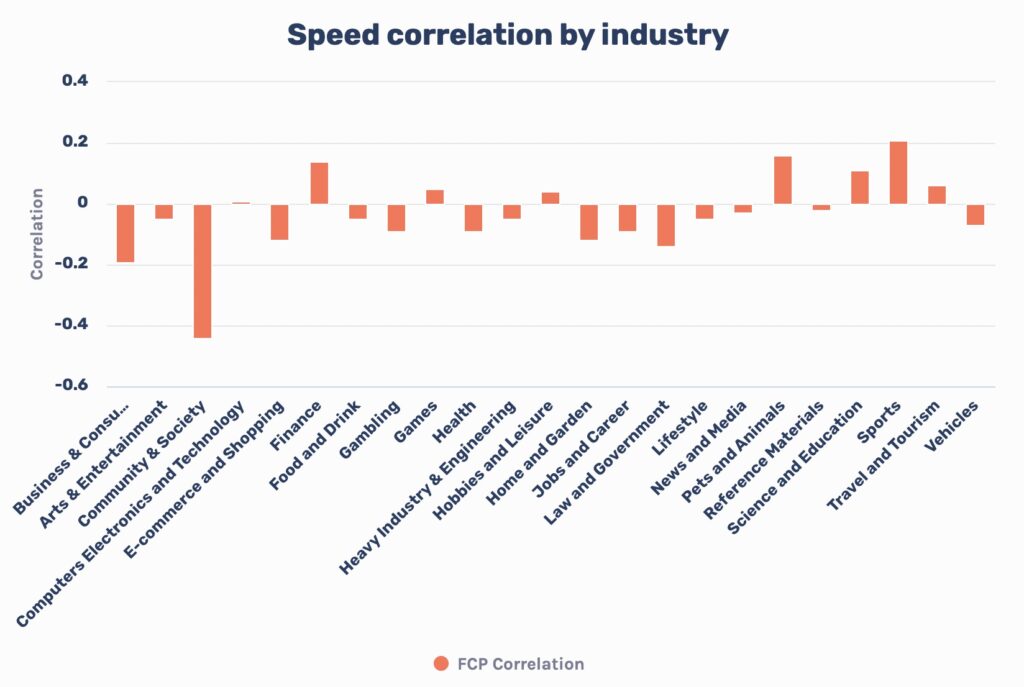

When broken down by industry, the correlation still wasn't there.
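Both organic traffic and load times are heavily skewed, so a rank-based measure such as Spearman's rho is a sensible way to check for a relationship. The sketch below computes it overall and per industry using only the Python standard library. The `Site` fields are hypothetical, and this illustrates the check rather than reproducing the exact method behind the charts above.

```python
from __future__ import annotations

import math
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Site:
    domain: str
    industry: str
    fcp_p90_ms: float      # hypothetical field: 90th-percentile FCP
    organic_traffic: float  # hypothetical field: estimated organic traffic


def average_ranks(values: list[float]) -> list[float]:
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j are ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman(xs: list[float], ys: list[float]) -> float:
    """Spearman's rho: the Pearson correlation of the two rank vectors."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences of length >= 2")
    rx, ry = average_ranks(xs), average_ranks(ys)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    if sx == 0 or sy == 0:
        return float("nan")  # undefined when one variable is constant
    return cov / (sx * sy)


def correlation_by_industry(sites: list[Site]) -> dict[str, float]:
    """Spearman's rho between p90 FCP and traffic per industry, plus 'ALL'."""
    groups: dict[str, list[Site]] = defaultdict(list)
    for s in sites:
        groups[s.industry].append(s)
    groups["ALL"] = list(sites)
    return {
        name: spearman([s.fcp_p90_ms for s in group], [s.organic_traffic for s in group])
        for name, group in groups.items()
        if len(group) >= 2
    }
```

A value near 0 means no monotonic relationship; a clearly negative value would mean higher-traffic sites tend to have a lower (faster) FCP.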

Is the lack of correlation a surprise?

The correlation data above isn't something you or I should be surprised about. It was exactly what I expected the outcome to be.

Google has mentioned on multiple occasions that speed is only a small ranking factor, and I wouldn't focus on site speed for the potential ranking benefit anyway.

The fact is, highly trafficked domains didn't get popular by focusing on site speed. However, providing an excellent experience for users undoubtedly helped.

Still, I'm sure every hosting company out there will keep professing the massive benefit their hosting will have on organic performance.

Now, onto more interesting data.

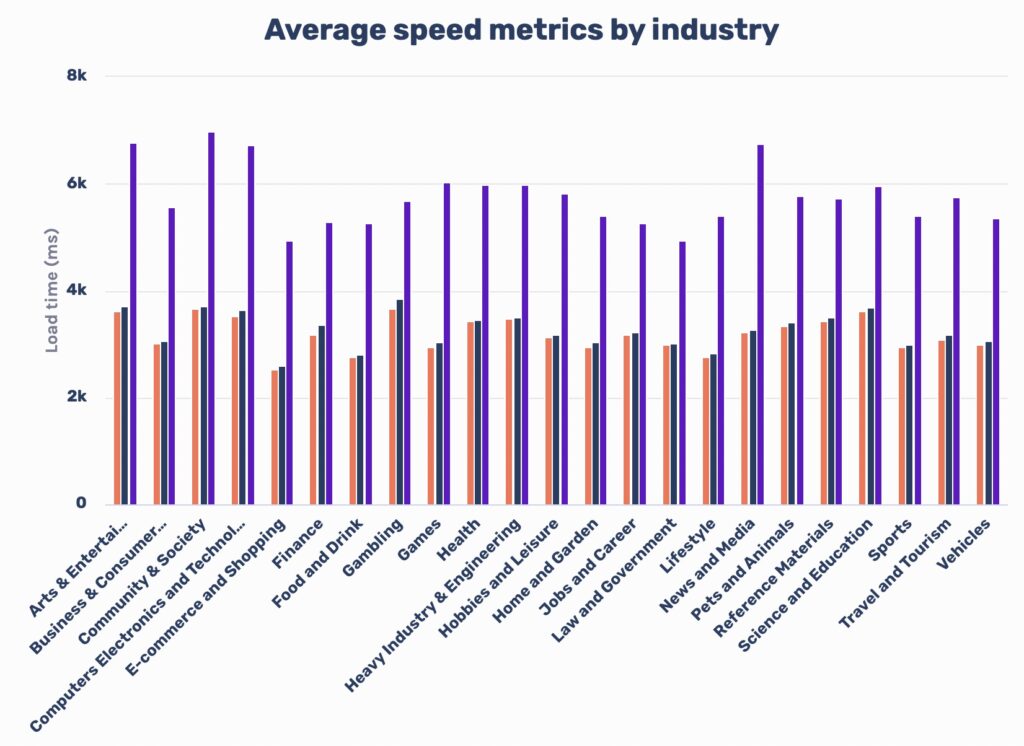

What are the average speed metrics by industry?

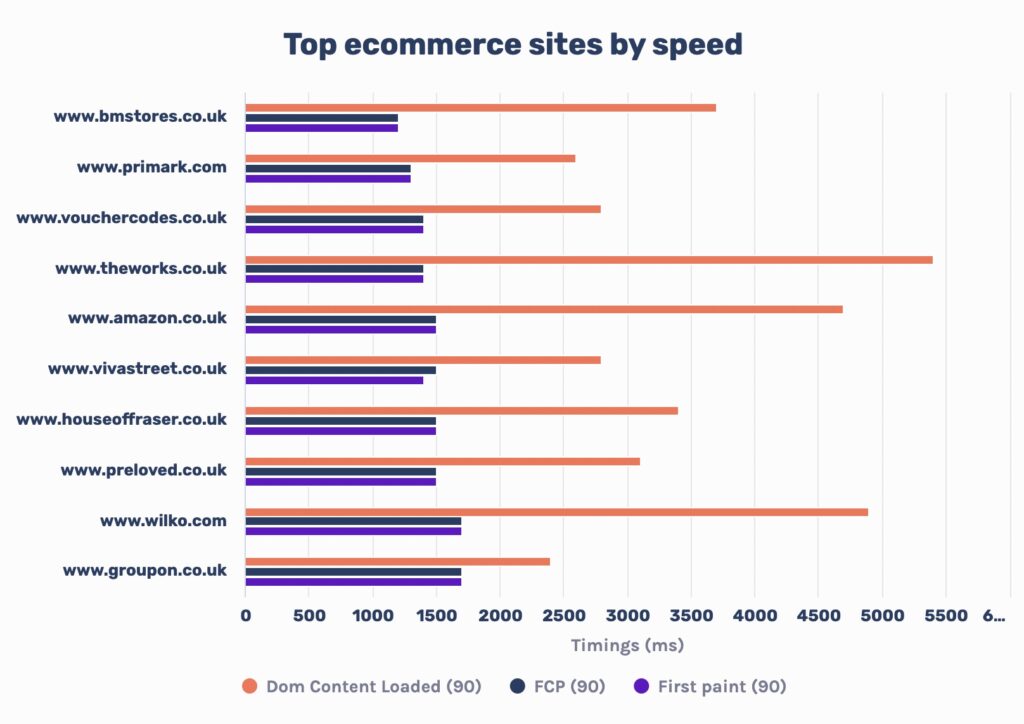

In this data, you can see that ecommerce sites have the lowest first paint, FCP and DOMContentLoaded timings of any industry.

I imagine this is because ecommerce sites focus more on site speed, given they know it directly impacts their conversion rate.
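Per-industry averages like these come from a simple group-by. Here is a minimal sketch, assuming one row per origin with the hypothetical keys `industry`, `first_paint_ms`, `fcp_ms` and `dcl_ms`:

```python
from __future__ import annotations

from collections import defaultdict
from statistics import mean

# Hypothetical column names for the three timing metrics.
METRICS = ("first_paint_ms", "fcp_ms", "dcl_ms")


def industry_averages(rows: list[dict]) -> dict[str, dict[str, float]]:
    """Mean of each timing metric per industry.

    Each row is assumed to look like
    {"industry": "Ecommerce", "first_paint_ms": 1200, "fcp_ms": 1300, "dcl_ms": 2500}.
    """
    grouped: dict[str, list[dict]] = defaultdict(list)
    for row in rows:
        grouped[row["industry"]].append(row)
    return {
        industry: {m: mean(r[m] for r in members) for m in METRICS}
        for industry, members in grouped.items()
    }


def fastest_industries(averages: dict[str, dict[str, float]], metric: str = "fcp_ms") -> list[str]:
    """Industries ordered from lowest (fastest) to highest average for `metric`."""
    return sorted(averages, key=lambda industry: averages[industry][metric])
```

The catch with a plain mean is that one very slow origin can drag an industry's figure up, which is why the slowest industries below are judged on PageSpeed Insights categories instead.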

As a well done to the top performers, here are the fastest of the most highly trafficked ecommerce sites:

Which industry has the slowest site speeds?

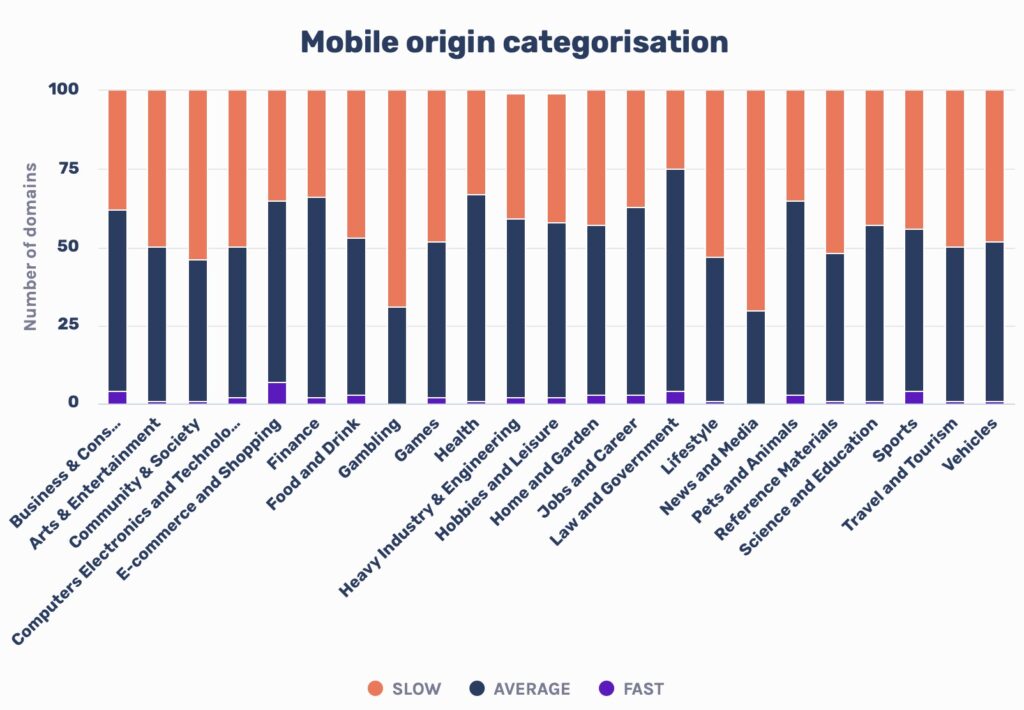

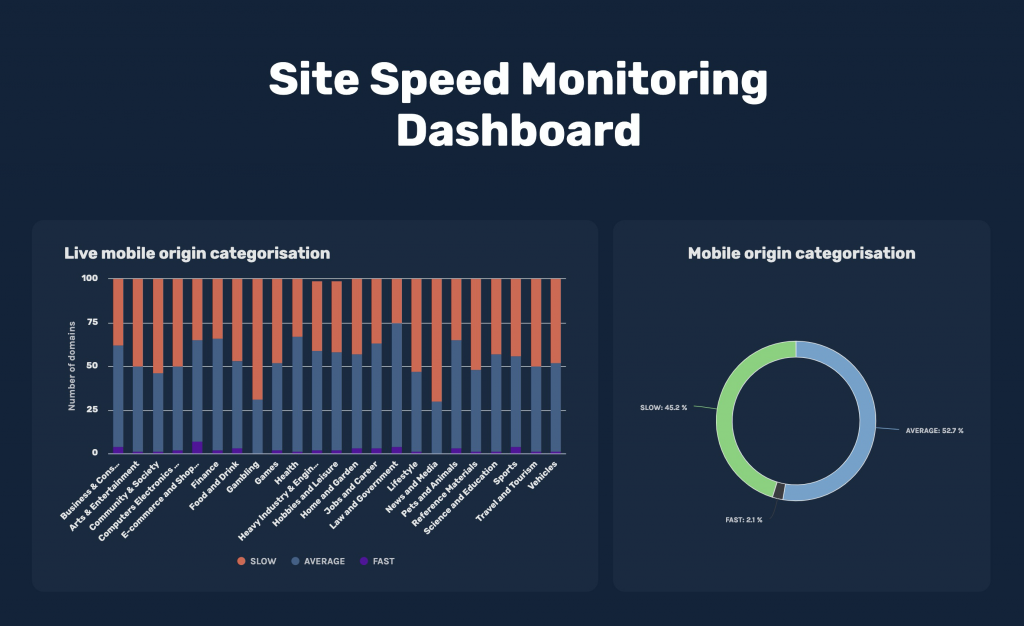

Rather than looking at averages to decide the slowest, I used the PageSpeed Insights API, which gives a good overall origin score by categorising sites as slow, average or fast.

This was to prevent a really slow site from bringing down the overall average for each industry.
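A minimal sketch of that categorisation, assuming the PageSpeed Insights v5 `runPagespeed` endpoint and its `originLoadingExperience.overall_category` field, which returned `FAST`, `AVERAGE` or `SLOW` at the time of writing. It assumes the JSON responses have already been fetched (one per origin and strategy) and parsed into dicts, then computes each industry's share of slow origins. The helper names are hypothetical.

```python
from __future__ import annotations

from collections import Counter, defaultdict
from urllib.parse import urlencode

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def build_psi_url(origin: str, strategy: str = "mobile", api_key: str | None = None) -> str:
    """Request URL for the PageSpeed Insights v5 API ('mobile' or 'desktop')."""
    params: dict[str, str] = {"url": origin, "strategy": strategy}
    if api_key:
        params["key"] = api_key
    return f"{PSI_ENDPOINT}?{urlencode(params)}"


def origin_category(response: dict) -> str | None:
    """Read the origin-level category (e.g. 'FAST', 'AVERAGE', 'SLOW').

    Returns None when the response has no origin field data.
    """
    return response.get("originLoadingExperience", {}).get("overall_category")


def slow_share_by_industry(results: list[tuple[str, dict]]) -> dict[str, float]:
    """Share of categorised origins rated 'SLOW' in each industry.

    `results` pairs an industry name with a parsed PSI response.
    Origins without field data are left out of the denominator.
    """
    counts: dict[str, Counter] = defaultdict(Counter)
    for industry, response in results:
        category = origin_category(response)
        if category is not None:
            counts[industry][category] += 1
    return {industry: c["SLOW"] / sum(c.values()) for industry, c in counts.items()}
```

Because each origin contributes a single category, one extremely slow site counts once rather than pulling an industry's average up. Industries can then be ranked with `sorted(shares, key=shares.get, reverse=True)`.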

Based on this data, the industry where you're most likely to have a slow experience is news and media, closely followed by gambling:

What is quite interesting here is just how few sites are considered fast on mobile.

Of the sites that are fast on mobile, ecommerce is again leading the way.

Whilst PageSpeed Insights sets a relatively high bar for a 'fast' rating on mobile devices, I'd have hoped for more.

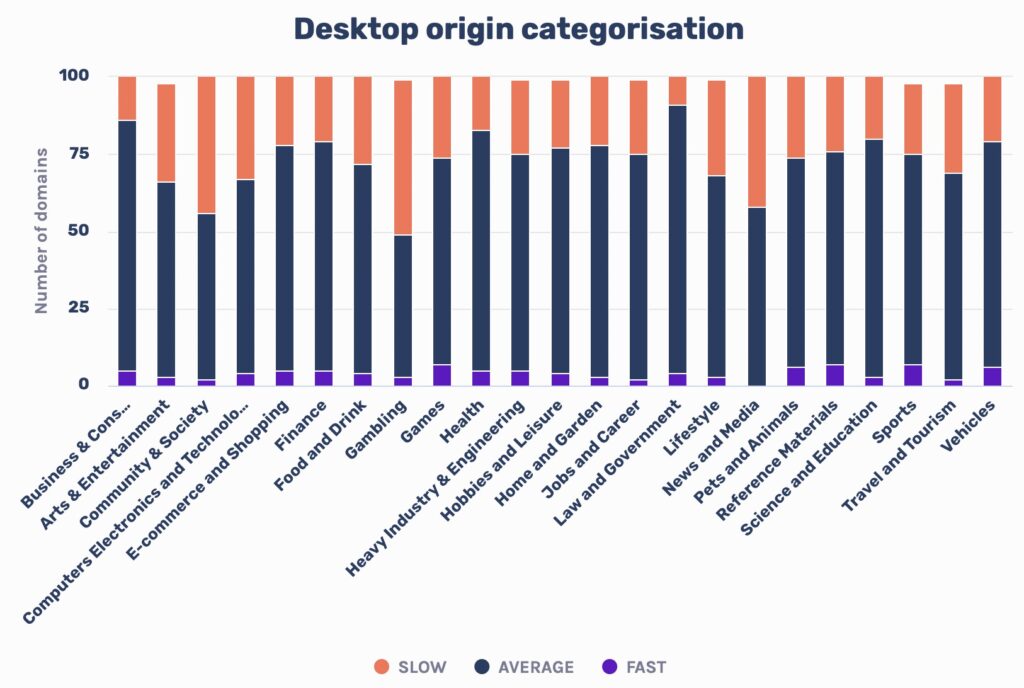

As you'd expect, on desktop we see fewer slow sites and a noticeably larger share of sites with average speed.

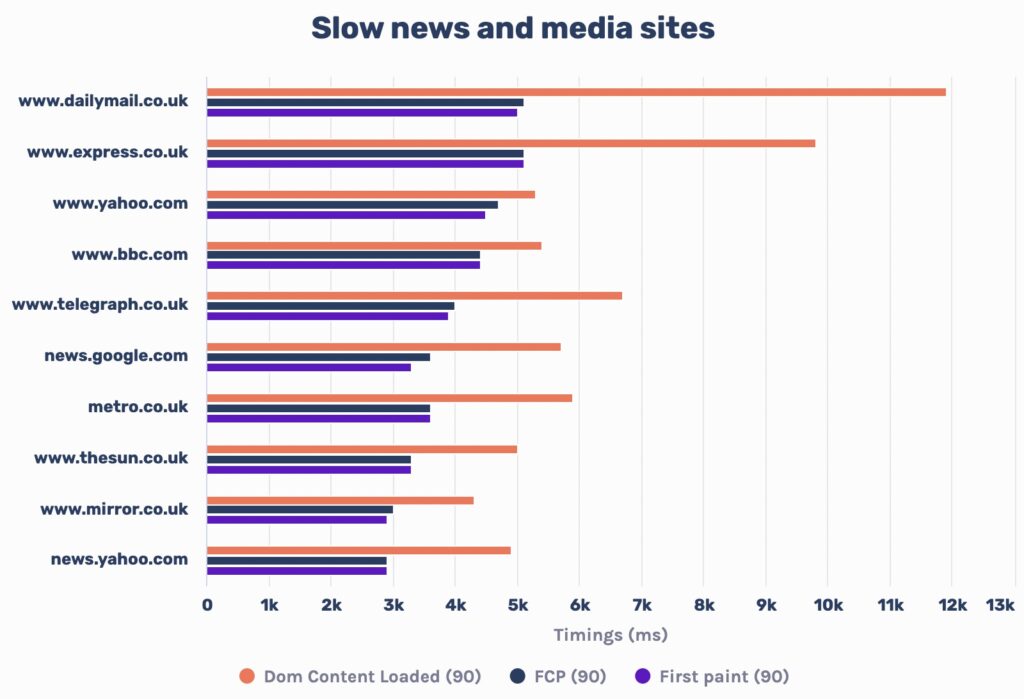

Just as we praise the fast sites, we must also highlight the slow ones. Here are the ten slowest sites in news and media weighted by how much organic traffic they receive.

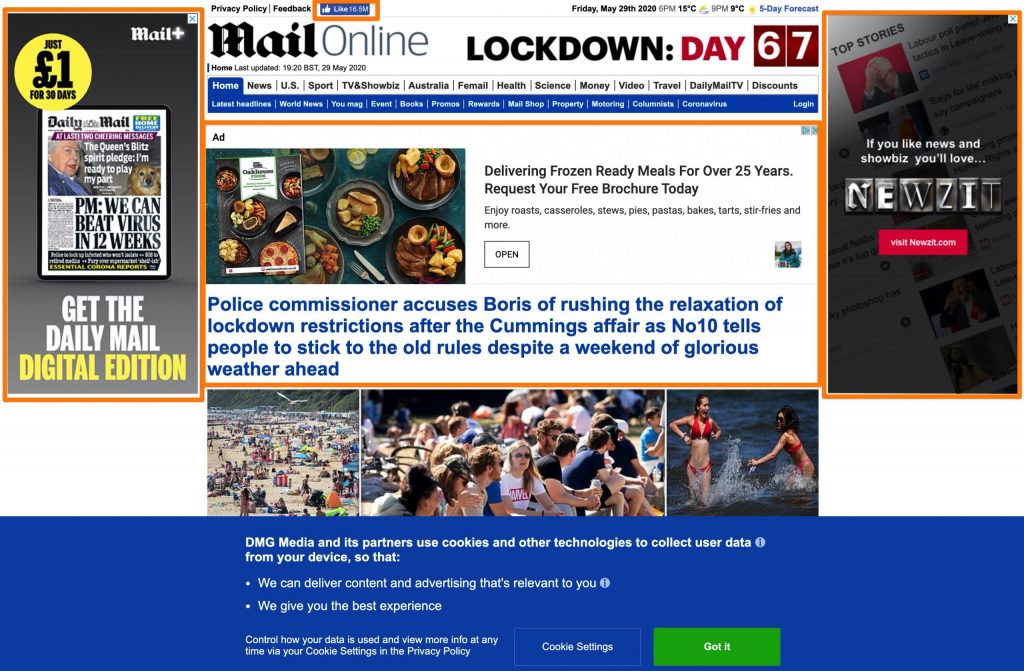

The Daily Mail, one of the most highly trafficked news sites, tops the list with an impressive average FCP of 5.1 seconds and a DOMContentLoaded time of almost 12 seconds.

What I do quite like about this data is that Google, which spends a lot of time promoting the benefits of site speed, has its very own news domain on the list...

And the fastest sites?

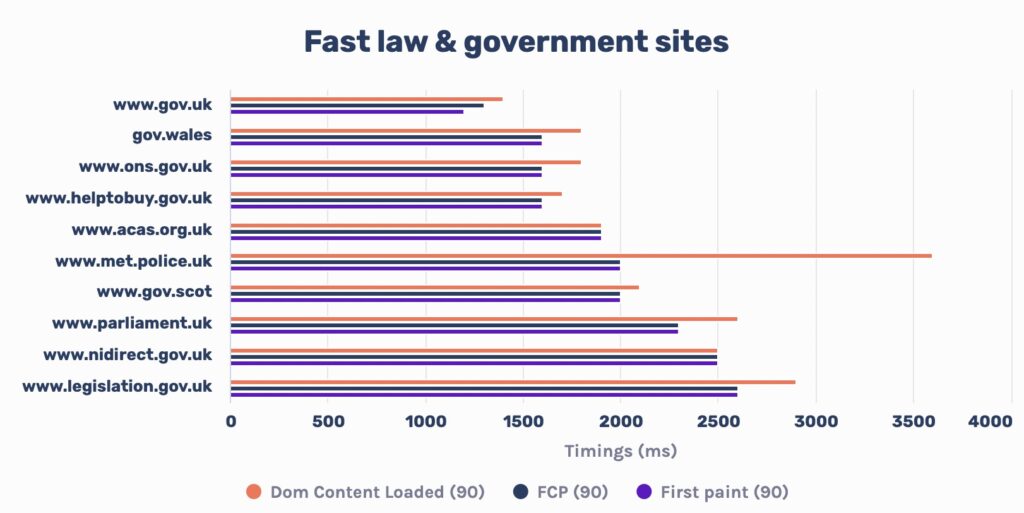

You can also see that law & government sites are the fastest around, followed by ecommerce, which we previously saw performing well on average speed metrics.

Here are the load timings of the fastest sites for law & government.

It looks like gov.uk sites must have a great tech team to ensure an excellent experience for users!

The top 10 slowest sites for each industry

Next up, I delved into which sites in each industry are the main culprits for slow site speed.

I wanted to focus on the larger sites within each industry, so I took the top 100 sites by organic traffic in each industry and then narrowed that down to the 10 slowest (by 90th-percentile FCP).

Below are the results:

| Domain | Category | First paint p90 (ms) | FCP p90 (ms) | DOMContentLoaded p90 (ms) | Semrush traffic |
|---|---|---|---|---|---|
| www.qvcuk.com | Arts & Entertainment | 29,200 | 29,200 | 34,100 | 1,508,611 |
| www.dailymotion.com | Arts & Entertainment | 7,100 | 7,600 | 9,200 | 3,072,187 |
| www.netflix.com | Arts & Entertainment | 5,300 | 5,700 | 9,200 | 10,706,537 |
| www.metacritic.com | Arts & Entertainment | 5,600 | 5,600 | 8,800 | 787,344 |
| www.wattpad.com | Arts & Entertainment | 5,400 | 5,400 | 9,700 | 783,270 |
| www.campaignlive.co.uk | Business & Consumer Services | 5,700 | 5,800 | 8,200 | 305,980 |
| www.inc.com | Business & Consumer Services | 5,400 | 5,300 | 14,800 | 438,805 |
| www.savills.com | Business & Consumer Services | 5,200 | 5,100 | 7,300 | 239,723 |
| www.thedrum.com | Business & Consumer Services | 4,400 | 4,400 | 6,900 | 385,388 |
| www.mindtools.com | Business & Consumer Services | 4,300 | 4,300 | 12,000 | 497,194 |
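The selection behind this table (top 100 sites by organic traffic per industry, then the 10 slowest by p90 FCP) can be sketched in a few lines of Python. The `Site` type and `pick_by_fcp` function are hypothetical; passing `slowest=False` returns the fastest sites instead.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Site:
    domain: str
    industry: str
    fcp_p90_ms: int       # 90th-percentile first contentful paint
    organic_traffic: int  # e.g. a Semrush traffic estimate


def pick_by_fcp(
    sites: list[Site], top_n: int = 100, pick_n: int = 10, slowest: bool = True
) -> dict[str, list[Site]]:
    """Per industry: keep the top_n sites by traffic, then the pick_n slowest
    (or fastest, when slowest=False) by p90 FCP."""
    by_industry: dict[str, list[Site]] = defaultdict(list)
    for site in sites:
        by_industry[site.industry].append(site)

    selection: dict[str, list[Site]] = {}
    for industry, members in by_industry.items():
        # Restrict to the largest sites first so small sites don't dominate.
        largest = sorted(members, key=lambda s: s.organic_traffic, reverse=True)[:top_n]
        # Then order by p90 FCP: descending for slowest, ascending for fastest.
        ranked = sorted(largest, key=lambda s: s.fcp_p90_ms, reverse=slowest)
        selection[industry] = ranked[:pick_n]
    return selection
```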

The top 10 fastest sites for each industry

And as the reverse of the above, here are the top 10 fastest sites for each industry, again weighted by how much traffic they receive.

| Domain | Category | First paint p90 (ms) | FCP p90 (ms) | DOMContentLoaded p90 (ms) | Semrush traffic |
|---|---|---|---|---|---|
| planetradio.co.uk | Arts & Entertainment | 1,700 | 1,700 | 2,900 | 2,194,388 |
| www.digitalspy.com | Arts & Entertainment | 1,800 | 1,800 | 2,600 | 7,503,336 |
| www.discogs.com | Arts & Entertainment | 1,900 | 1,900 | 3,000 | 2,025,470 |
| www.gear4music.com | Arts & Entertainment | 1,900 | 1,900 | 3,200 | 749,849 |
| www.ultimate-guitar.com | Arts & Entertainment | 1,700 | 2,000 | 2,900 | 1,641,378 |
| www.onthemarket.com | Business & Consumer Services | 1,300 | 1,300 | 3,700 | 2,489,147 |
| www.propertypal.com | Business & Consumer Services | 1,300 | 1,300 | 2,200 | 826,748 |
| homes.trovit.co.uk | Business & Consumer Services | 1,300 | 1,300 | 2,200 | 348,549 |
| www.openrent.co.uk | Business & Consumer Services | 1,400 | 1,400 | 2,600 | 451,609 |
| www.rightmove.co.uk | Business & Consumer Services | 1,400 | 1,500 | 3,600 | 25,548,012 |

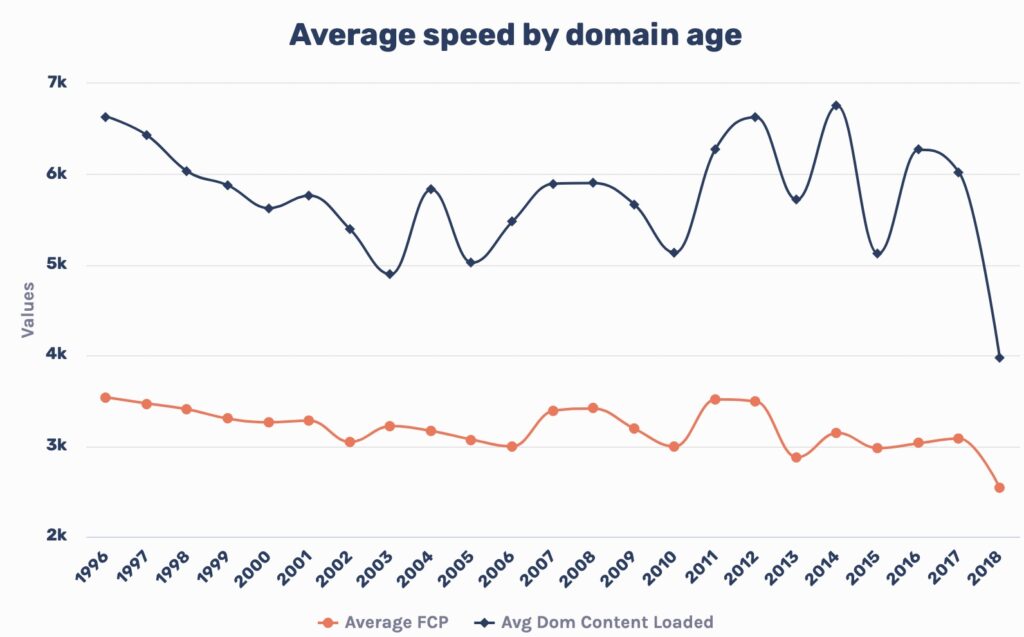

Newer sites tend to be faster

I used the Sistrix API, which has a handy call that returns the age of a domain, and found a slight trend for newer sites to perform better.

Given this dataset is based on sites that perform well organically, it suggests that newer sites that perform well organically also tend to have slightly better site speed.

This makes it seem like people are listening to Google on the importance of speed!

One other reason could be that older domains are more likely to carry significant tech debt while they're stuck on older platforms.

Another is that site speed is getting easier to optimise thanks to plugins and CDNs that take the heavy lifting off your origin server. Newer domains that are smaller, more agile and free of tech debt can take advantage of these more quickly if they plan for it from the get-go.

Whilst interesting, to say conclusively that newer domains tend to have better site speed, we'd need a more extensive dataset, as this one is weighted more heavily towards older domains than fresh ones.
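If you want to repeat the domain-age comparison on a larger dataset, one simple approach is to bucket domains into age bands and compare the median p90 FCP of each band. This sketch assumes domain ages (in years) have already been retrieved, for example from Sistrix; the API call itself isn't shown, and the band edges are arbitrary, hypothetical choices rather than ones used in this analysis. Using the median keeps a single very slow domain from skewing a band.

```python
from __future__ import annotations

from bisect import bisect_right
from collections import defaultdict
from statistics import median

# Hypothetical age bands in years: [0, 5), [5, 10), [10, 20), [20, inf)
BAND_EDGES = [5, 10, 20]
BAND_LABELS = ["<5y", "5-10y", "10-20y", "20y+"]


def age_band(age_years: float) -> str:
    """Map a domain age in years to one of the labelled bands."""
    return BAND_LABELS[bisect_right(BAND_EDGES, age_years)]


def median_fcp_by_age(rows: list[tuple[float, float]]) -> dict[str, float]:
    """Median p90 FCP per age band from (age_years, fcp_p90_ms) pairs."""
    grouped: dict[str, list[float]] = defaultdict(list)
    for age, fcp in rows:
        grouped[age_band(age)].append(fcp)
    # Report bands in age order and skip any band with no domains.
    return {label: median(grouped[label]) for label in BAND_LABELS if grouped[label]}
```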

Coming soon, a CrUX dashboard

I'm planning to create a dashboard that uses Core Web Vitals to monitor different industries and trends monthly, using the dataset I've run through in this blog post.

Given that Google will soon be using Core Web Vitals as a ranking factor, it'll be interesting to see if sites start to improve their speed because of it.

It will also be interesting to see whether the correlation between speed and organic traffic increases after the update has rolled out.

I'm currently custom coding it, but I may use Data Studio to speed up the process.

Final words

Hopefully, you've found this post interesting. It was a fun bit of analysis that I'd like to refresh in the future.

In building this dataset, I've had a few more ideas for future posts, so make sure to follow me on Twitter if you'd like to see more.